Last fall, I decided to learn how to color grade properly after seeing what Steve Yedlin was doing. It was amazing how much control he could extract from the footage of nearly any camera by fully understanding how the color and post-processing pipeline works in combination with on-set practices. I have been watching all the same tutorials, YouTube videos and workshops as everyone else. If there is one thing I now know, it's that despite how much I have learned, I know almost nothing. That became even more apparent last week, especially considering what I have learned since.

I was wrong about what I was seeing in my initial test. While impressive to someone taking the footage at face value, it was really nothing miraculous. The difference was simply that I followed someone else's workflow, Juan Melara's, and did it “right” for once.

While it initially looked like I was unlocking some added dynamic range, I was really just setting up the project from a more advanced and more advantageous starting point: one that let me see what the SIGMA fp was actually capturing, and work with it in a way that was familiar and effective. The fp footage seemed to take on better image reproduction qualities and seemed to perform nearly as well as the much more expensive and impressively specced Panasonic S1H. So while the results are not false by any means, I did a few things… wrong. Well, not wrong; inefficient and clumsy is a better description.

Getting Other Opinions

After posting my findings, I was contacted by a few colleagues who specialize in color and post. They could see that I was doing it “right”, but that I didn’t really know WHY it was right. This is why the film community is so important: there is no way I could have learned as much as I did without the time other people gave me. Mentorship is priceless.

I had a multi-hour conversation with colorist Juan Salvo and Tim Kang, a far more experienced Resolve user than me, and briefly with Deanan DeSilva. They explained that because Cinema DNG is an open format, there is no single set way of filling that container with information. So you are at the mercy of how the camera writes its data into the CDNG container. Then there is the issue of how the editing/grading software interprets that data and displays it to you. The two may not line up.

Of course, you can just work with the RAW CDNG from the SIGMA fp as is; there is nothing wrong with that method. For me, I was never happy with the highlight and shadow handling out of camera. It felt too crunchy. To speed up the process of getting the footage where I want it, I took advice from both Tim and Juan and found what works best for me. The method transforms the CDNG into linear space and converts it to ARRI color and LogC, just like I did in that first test. The difference is how I got there.

Color Science

I chose ARRI color science and LogC as the output because it has the most established workflow overall: I have tons of looks, grades, and plugins that work with Alexa footage. (A quick side note: Tim Kang also showed me a very clever method for working with LOG files, using transformations that make them behave as if they were RAW. I will detail that in another post.)

Essentially, RAW is just the RGB values coming off the sensor with no “Color Science” applied yet. Theoretically, if you were to only de-bayer the image and record the straight linear RGB values off the sensor uncompressed, before any image conversions, you would have something very close to RAW.

Some major manufacturers have to manipulate their RAW output in a “half baked in” method similar to this to get around patents on recording compressed RAW. The sensor data is de-bayered, but it doesn't have any white balance or look baked in because it's in linear gamma. White balance is just the balance between the R, G and B levels, manipulated mathematically; the result is then run through the selected color science, which takes the linear values and converts them into a viewable image that makes sense, like Rec.709 or one of the various LOG formats.
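To make the white balance point concrete: in linear space, white balance is nothing more than a gain on each channel. The sketch below assumes a hypothetical gray-card reading from a warm-lit shot and normalizes the gains to green (a common convention, assumed here). It illustrates the math only; it is not what any particular camera or Resolve does internally.

```python
def white_balance_gains(neutral_rgb):
    """Per-channel gains that make a (hypothetical) neutral patch read as gray.

    Gains are normalized so green stays at 1.0 (an assumed convention)."""
    r, g, b = neutral_rgb
    if min(r, g, b) <= 0:
        raise ValueError("neutral patch values must be positive linear values")
    return (g / r, 1.0, g / b)


def apply_gains(rgb, gains):
    """In linear light, white balance is a plain per-channel multiplication."""
    return tuple(value * gain for value, gain in zip(rgb, gains))


# Hypothetical gray card shot under warm light: too much red, too little blue.
warm_gray = (0.24, 0.18, 0.12)
gains = white_balance_gains(warm_gray)
balanced = apply_gains(warm_gray, gains)
print([round(g, 4) for g in gains])     # [0.75, 1.0, 1.5]
print([round(v, 4) for v in balanced])  # [0.18, 0.18, 0.18]
```

Because the data is still linear, these multiplications are clean and reversible; doing the same thing after a log curve has been applied is no longer a simple gain.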

In a traditional color space, linear footage looks like it has about 3 stops of dynamic range. Much of the image is so dark it looks black, there are maybe one or two stops' worth of “mids”, and the rest blows out to white and looks oversaturated. This is simply because traditional color and gamma spaces can't interpret the data correctly: no curve has been applied to it.
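A quick way to see why linear looks so compressed: in linear light each stop down halves the value, so the brightest stops eat almost the whole signal range. The sketch below is simplified; it ignores the display's own gamma, which would only push the shadows darker still.

```python
def code_range_per_stop(num_stops):
    """Fraction of a 0..1 linear signal occupied by each stop below clip.

    The brightest stop spans 0.5..1.0, the next 0.25..0.5, and so on,
    because each stop down halves the linear value."""
    fractions = []
    top = 1.0
    for _ in range(num_stops):
        bottom = top / 2.0
        fractions.append(top - bottom)
        top = bottom
    return fractions


stops = code_range_per_stop(12)
print(stops[:3])       # [0.5, 0.25, 0.125]
print(sum(stops[:3]))  # 0.875
print(sum(stops[3:]))  # 0.124755859375, shared by the remaining nine stops
```

The top three stops take 87.5% of the range, which matches the impression of "about 3 stops" when linear data is viewed without a curve.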

So, since linear space is mathematically similar to RAW, in that you are still just manipulating RGB values before they are folded into a curve that looks good in a set color space, you can use the Color Space Transform tool in Resolve to do some interesting things. You can make RAW-like image manipulations in the steps between the conversion to Linear/P3 and the output to ARRI color/LogC.

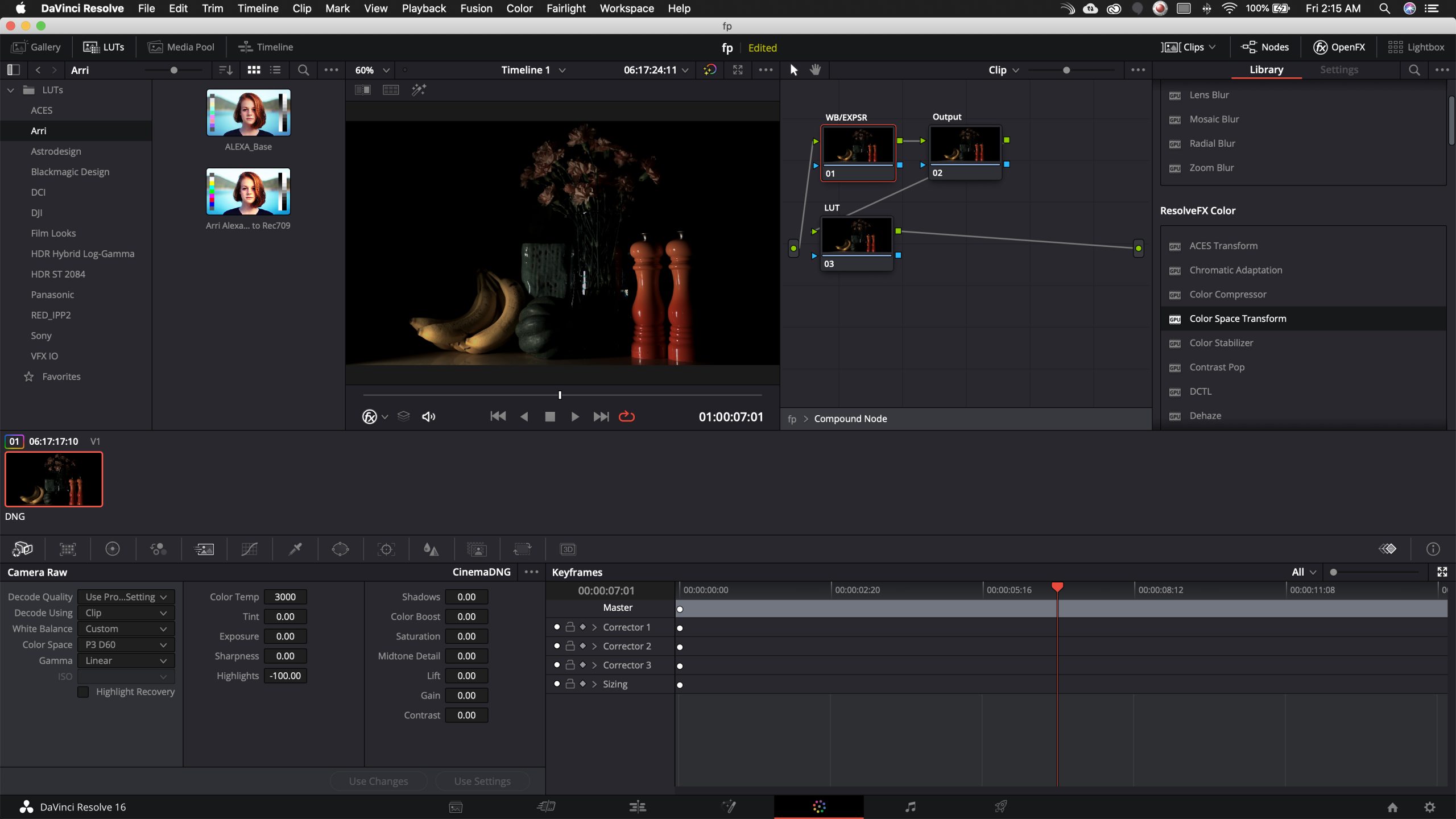

The nodes between these steps now act somewhat like the DSP in the camera. Set up the Cinema DNG RAW in the Camera RAW tab in DaVinci Resolve to output the widest color gamut it allows, P3 D60, with Linear gamma. You can then make your adjustments to WB, color, contrast and “ISO” with the nodes between the input and output color space nodes. This lets you get the image to the right place BEFORE you convert it to a readily viewable grading starting point: the ARRI color/LogC output of the node tree.
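As a rough illustration of what those in-between nodes are doing to the numbers: an “ISO”/exposure change in linear light is a multiplication by 2 raised to the number of stops, and a WB change is one gain per channel. The function name `in_between_node` is hypothetical, and the sketch leaves out the 3×3 gamut matrix the output transform would apply afterwards.

```python
def in_between_node(rgb, exposure_stops=0.0, wb_gains=(1.0, 1.0, 1.0)):
    """Hypothetical stand-in for the WB/Exposure node on linear P3-D60 values.

    +1 stop doubles every channel and -1 stop halves it; white balance is
    one gain per channel. Both are plain multiplications in linear light."""
    scale = 2.0 ** exposure_stops
    return tuple(value * gain * scale for value, gain in zip(rgb, wb_gains))


linear_pixel = (0.20, 0.18, 0.15)
print(in_between_node(linear_pixel, exposure_stops=1.0))  # (0.4, 0.36, 0.3)
print(in_between_node(linear_pixel, exposure_stops=-0.5, wb_gains=(0.9, 1.0, 1.2)))
```

Because these are simple scalings of scene-linear values, they behave much like adjusting exposure or white balance on the RAW itself, which is the point of doing them before the log conversion.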

Think of it as a prep cook meticulously selecting the very best from a pile of ingredients, then preparing them before handing them over to the chef. The intentional choice of which piece of meat and the very best vegetables, the consistent cuts and the attention to detail, all help everything cook more evenly and, in the end, taste just a little better than chopping everything up and throwing it in a pot.

Building Your Own Starting Point

I prefer doing it this way, as opposed to using the built-in RAW adjustments in Resolve, because I don’t love how Resolve treats SIGMA fp footage natively, and this method bypasses that process. I can essentially build my own starting point, one that shows all the dynamic range the image has to offer, in the Log format of my choice.

For argument's sake, I’m going to use ARRI LogC, as it's pretty much ubiquitous in the industry at this point. Here is a very important note, though: LogC and ARRI color, despite going through some very accurate transforms between color spaces and gammas, are ultimately set up for an ARRI camera’s sensor. So just be aware that your footage may need a little tweaking to work with the ARRI LUTs. However, you can easily make those tweaks on the “in between” transform nodes to get it to a nice place.

Video Breakdown

Watch the video showing the process. Below it are some screen grabs that explain the process in writing, useful as a reference to come back to if you decide to try it.

Step-By-Step Image Breakdown

Reference:

The master color management settings for the project.

The starting point:

Decode: Clip

White balance: As close as possible to what you think you shot the footage at, or a little off if you prefer warmer or cooler. It won't matter much, because you can adjust it in the WB node using the linear RGB values.

Color Space: P3 D60 (the widest one CDNG allows. With R3D or ARRIRAW you can choose the native color space, and other RAW formats give you more options, but CDNG only offers Rec.709, Blackmagic Design and P3 D60. I don’t use the Blackmagic color space because there is no transform option for it in the Color Space Transform tool.)

Gamma: Linear

Highlight Recovery: Off

Then I make three nodes:

1: White balance/Exposure

2: Output Transform

3: LUT

Also, and this is very important: on the Color Wheels tab, set Lum Mix to zero for the WB/Exposure node. Lum Mix adjusts saturation as you change values to keep saturation and luminance in check, but since we are looking for a more “manual” way of doing things, it can cause problems at this stage, in linear space.

Next, add the Color Space Transform tool to the Output Transform node. Don’t touch the other nodes yet.

Set it to:

Input Color Space: P3-D60

Input Gamma: Linear

Output Color Space: ARRI Alexa

Output Gamma: ARRI LogC

(You can also output REDWideGamutRGB/Log3G10 or V-Gamut/V-Log. Make sure the gamma and color space match for now, unless you really know what you are doing or plan on grading fully manually with no camera LUTs.)
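For reference, here is roughly what the output gamma half of that transform does. The sketch encodes scene-linear values with the LogC (v3) curve, using the constants ARRI publishes for EI 800; I'm assuming that is the variant the CST uses for ARRI LogC. It deliberately leaves out the other half of the CST, the 3×3 gamut matrix from P3-D60 to ARRI Wide Gamut, so treat it as an illustration rather than a replacement for the tool.

```python
import math

# ARRI LogC (v3) encoding constants for EI 800, scene-linear input.
CUT = 0.010591
A = 5.555556
B = 0.052272
C = 0.247190
D = 0.385537
E = 5.367655
F = 0.092809


def logc3_encode(x):
    """Scene-linear value -> LogC3 (EI 800) signal, roughly 0..1."""
    if x > CUT:
        return C * math.log10(A * x + B) + D
    return E * x + F  # straight-line toe for very dark values


# Middle gray (0.18) and a few stops either side of it.
for stops in (-4, -2, 0, 2, 4, 6):
    linear = 0.18 * 2.0 ** stops
    print(f"{stops:+d} stops: linear {linear:.4f} -> LogC {logc3_encode(linear):.3f}")
```

Middle gray lands at about 0.391, and each stop above it adds a similar step in LogC, which is why the log image looks flat but holds every stop the linear data had.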

It's all about getting to a nice starting point with SIGMA fp CDNG files. Remember, this is just one of a hundred ways to grade footage. But I like how this gives me control both before and after the point where the Log image is created and the looks are applied. Also, changes made before the conversion to log have a much bigger effect down the pipeline, because you are “changing the ingredients”, to stick with the cooking analogy.
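One way to see the “changing the ingredients” effect in numbers: a one-stop exposure change made in linear, before the CST, comes out of the LogC curve as a nearly uniform shift, so every node and LUT downstream sees the image as if it had been exposed differently. This sketch reuses the ARRI LogC (v3) EI 800 constants and, as before, ignores the gamut matrix.

```python
import math

# ARRI LogC (v3) EI 800 constants, scene-linear input.
A, B, C, D = 5.555556, 0.052272, 0.247190, 0.385537
CUT, E, F = 0.010591, 5.367655, 0.092809


def logc3_encode(x):
    """Scene-linear value -> LogC3 (EI 800) signal."""
    return C * math.log10(A * x + B) + D if x > CUT else E * x + F


for linear in (0.18, 0.72, 2.88):
    before = logc3_encode(linear)
    after = logc3_encode(linear * 2.0)  # +1 stop applied in linear, before the CST
    print(f"{linear:.2f}: LogC shift for +1 stop = {after - before:.4f}")

# Well above the toe, the shift per stop approaches C * log10(2).
print(round(C * math.log10(2.0), 4))  # 0.0744
```

Doing the same adjustment after the log conversion would mean guessing at an offset instead of simply scaling the original light values.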

Found this on Vimeo last night... it really did a number on my fp footage. Really great tutorial. I shoot CDNG on both my main cams. Do you think this workflow would work for Odyssey 7Q CDNG coming out of an FS5 or FS700?

Great article; it really got me started looking for the best way to deal with fp DNGs.

Resolve 17 added Blackmagic’s color spaces to the CST, and I have found Blackmagic Design (Color Space) and Blackmagic Design Film (Gamma) to work quite well, particularly with reds.